LLMs, Thin Slices, and the Secret to a Great Scientific Presentation

Published on May 5, 2025

If you’ve ever given a scientific presentation and noticed your audience mentally checking out before you hit slide three, congratulations: you’ve experienced the brutal power of the "thin slice". That’s the term psychologists use for the quick judgments people make based on just a few seconds of observation. Turns out, those judgments are often surprisingly accurate, just maybe not in your favor.

For scientists of all levels, those first impressions matter enormously. Whether you’re defending your thesis, giving a job talk to potential employers, or pitching a new project to senior leaders, those first moments on stage are doing a lot of heavy lifting. Like it or not, people often decide whether you’re credible, confident, and competent before your science gets a chance to shine.

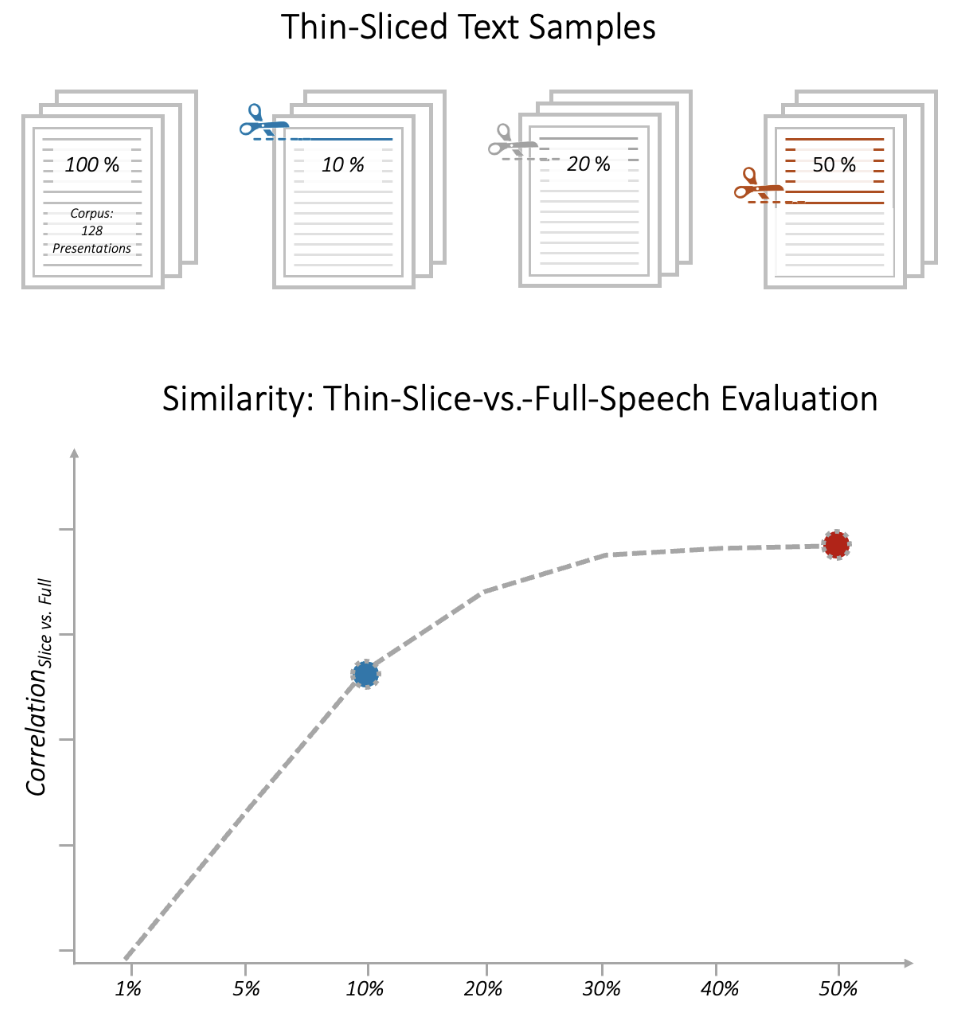

Now here’s where things get interesting: a recent study (Schmälzle et al. “The Art of Audience Engagement: LLM-Based Thin-Slicing of Scientific Talks.” arXiv, April 15, 2025) found that large language models (LLMs), yes, the same tech you might have used to polish your slides, can predict human ratings of presentation quality from thin slices alone. Even better, this works reliably across different LLMs and prompts.

In other words, AI doesn’t just help you create your presentation. It can now help you test-drive your delivery. Before you face a real audience, you could run a snippet of your talk through an LLM: a fast, low-effort way to gauge the first impression you’re likely to make. Think of it as rehearsing with a brutally honest (but never judgmental!) AI colleague.
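If you want to try this yourself, here is a minimal Python sketch of preparing such a test run. It is not the procedure used in the study: the 150-word cutoff, the rating criteria, and the helper names `thin_slice` and `build_rating_prompt` are all illustrative assumptions. The sketch only builds the prompt text; you would paste it into whichever LLM you already use.

```python
def thin_slice(transcript: str, max_words: int = 150) -> str:
    """Hypothetical helper: keep only the opening of a talk transcript.

    The 150-word default is an arbitrary assumption, roughly the first
    minute of speech, not a value taken from the study.
    """
    words = transcript.split()  # split on any whitespace
    return " ".join(words[:max_words])


def build_rating_prompt(slice_text: str, scale_max: int = 10) -> str:
    """Hypothetical helper: wrap a thin slice in a rating request for an LLM.

    The wording and the criteria (engaging, credible, clear) are
    illustrative assumptions; adjust them to what you care about.
    """
    return (
        "You are an audience member at a scientific talk. "
        f"Based only on the opening excerpt below, rate on a scale of 1 to {scale_max} "
        "how engaging, credible, and clear the speaker seems, "
        "and briefly explain your rating.\n\n"
        f'Excerpt:\n"""\n{slice_text}\n"""'
    )


if __name__ == "__main__":
    # Placeholder text: replace with a transcript of your own rehearsal.
    transcript = "Good morning everyone. Today I want to tell you why cells forget."
    prompt = build_rating_prompt(thin_slice(transcript, max_words=150))
    print(prompt)
```

A word cutoff is used here only because a transcript is what most of us have on hand; if your rehearsal recording has timestamps, slicing by time (say, the first minute) is an obvious alternative.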

Of course, AI can’t replace storytelling, presence, or human connection—these are skills you build through practice, not shortcuts. So treat AI as an assistant: something to help you refine, reflect, and improve. The goal isn’t to sound like a machine—it’s to become a better version of yourself.
