Building AI Agents for Chemistry — Lessons from ICLR 2025

Published on April 29, 2025 — 4 min read

What do a PChem exam, retrosynthesis, and zeolite design have in common? In 2025, ChatGPT can take a swing at all three. While that claim might still be a stretch, the International Conference on Learning Representations (ICLR 2025) showed just how far state-of-the-art large language models (LLMs) like GPT have come in chemistry. The six papers outlined below capture the breadth of what’s now possible.

| Paper | Key Takeaways |
|---|---|
| Efficient Evolutionary Search Over Chemical Space with Large Language Models | LLM-guided crossover and mutation accelerate evolutionary search, reaching better molecules with far fewer costly evaluations. |
| ChemAgent: Self-updating Memories in Large Language Models Improves Chemical Reasoning | A self-updating library of solved sub-tasks gives the model memories it can mine to decompose and solve new chemistry problems. |
| OSDA Agent: Leveraging Large Language Models for De Novo Design of Organic Structure Directing Agents | An Action–Evaluation–Reflection loop couples GPT with chemistry tools to iteratively craft new organic molecules for zeolite design. |
| RetroInText: A Multimodal Large Language Model Enhanced Framework for Retrosynthetic Planning via In-Context Representation Learning | Reaction narratives combined with 3-D embeddings enable context-aware retrosynthetic planning across long pathways. |
| MOOSE-Chem: Large Language Models for Rediscovering Unseen Chemistry Scientific Hypotheses | A multi-agent pipeline mines literature, synthesizes inspirations, and ranks fresh ideas to discover novel chemistry hypotheses. |
| MatExpert: Decomposing Materials Discovery by Mimicking Human Experts | An LLM-driven retrieve-transition-generate workflow imitates materials experts to propose valid, stable solid-state candidates. |

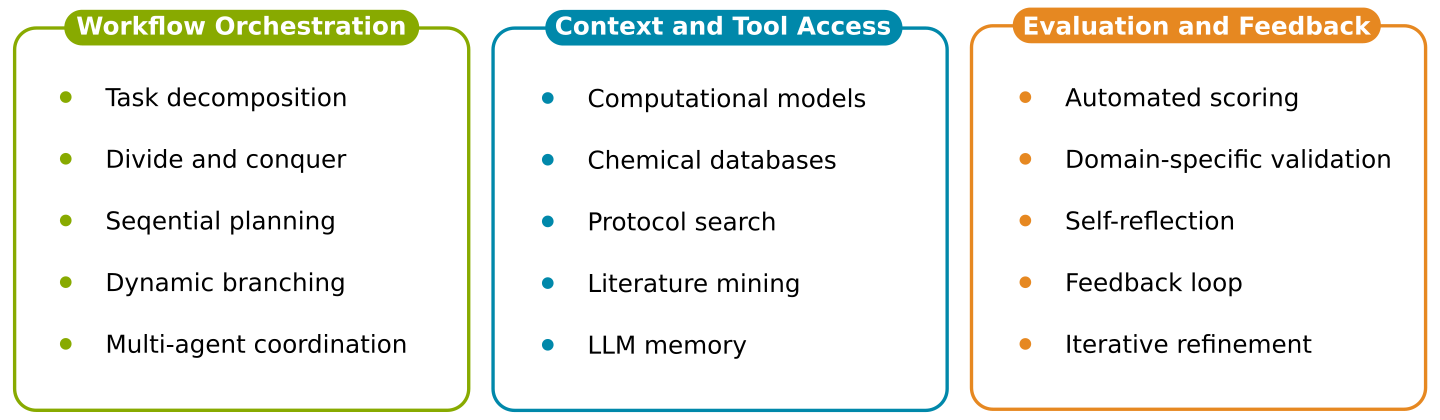

Scanning the six papers side-by-side, a clear pattern emerges: each team embraces an agentic design, embedding the LLM as a core component of a broader, structured workflow. Across these systems, three core pillars of the agentic approach consistently stand out:

- Every system builds a structured workflow that blends LLM-driven steps with domain-specific tools and operations. In some cases, the workflow is explicitly scripted: OSDA Agent follows a fixed Actor–Evaluator–Reflector loop for de novo molecule design, while MatExpert breaks materials discovery into retrieve–transition–generate stages. In others, the LLM dynamically orchestrates the sequence based on the task at hand, as seen in Efficient Evolutionary Search, where LLM-suggested mutations are evaluated externally before the next move is chosen. Either way, the core idea is the same: the LLM doesn’t work in isolation. It combines its own reasoning with external computation as part of a larger, multi-step process.

- Providing the LLM with rich chemistry context is essential, whether by linking to external resources or by dynamically constructing internal knowledge. RetroInText enhances retrosynthetic planning by fusing reaction narratives with 3-D molecular embeddings, while MOOSE-Chem dynamically mines the scientific literature for inspiration papers to frame hypothesis generation. Alternatively, ChemAgent implicitly builds a dynamic internal context through its memory system, recalling sub-solutions to inform new problems. Across these designs, it’s clear that successful agents supplement plain-text prompts with structural, relational, and historical chemistry knowledge, mirroring how human chemists reason with both memory and references.

- Feedback loops and self-reflection are now standard tools for iteratively improving outcomes and maintaining chemical validity. OSDA Agent explicitly incorporates an evaluate–reflect–refine cycle, where performance feedback shapes subsequent molecule proposals. In MOOSE-Chem, multi-agent collaboration enables ranking and filtering of generated hypotheses based on plausibility and novelty. These mechanisms allow LLM-based agents to self-correct, absorb new information, and steadily improve their outputs, moving closer to the disciplined iterative thinking expected in real-world chemical research.
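To make the three pillars concrete, here is a minimal Python sketch of a propose–evaluate–reflect loop that carries a memory of past attempts. It is not taken from any of the six papers: `run_agent_loop`, `AgentState`, and the `toy_*` functions are hypothetical names, the "LLM" is a deterministic stand-in, and the "chemistry tool" is a toy scorer that only counts characters in a carbon-chain string. In a real agent, `propose` and `reflect` would call a language model and `evaluate` would call a domain tool.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class AgentState:
    """Context carried between iterations (all fields are illustrative)."""
    memory: List[Tuple[str, float]] = field(default_factory=list)  # (candidate, score) history
    feedback: str = ""  # latest reflection, read by the next proposal step


def run_agent_loop(
    propose: Callable[[AgentState], str],
    evaluate: Callable[[str], float],
    reflect: Callable[[str, float], str],
    max_steps: int = 10,
    target_score: float = 1.0,
) -> Tuple[Optional[str], float, AgentState]:
    """Propose a candidate, score it externally, reflect, and repeat."""
    state = AgentState()
    best, best_score = None, float("-inf")
    for _ in range(max_steps):
        candidate = propose(state)               # LLM-driven step (stubbed here)
        score = evaluate(candidate)              # external tool (stubbed here)
        state.memory.append((candidate, score))  # keep history as context
        if score > best_score:
            best, best_score = candidate, score
        if best_score >= target_score:
            break                                # good enough, stop early
        state.feedback = reflect(candidate, score)
    return best, best_score, state


TARGET_CARBONS = 6  # arbitrary toy target, not a real design objective


def toy_propose(state: AgentState) -> str:
    """Stand-in for an LLM call: grow or shrink the last chain by one carbon."""
    if not state.memory:
        return "CC"
    last, _ = state.memory[-1]
    if state.feedback == "too short":
        return last + "C"
    if state.feedback == "too long":
        return last[:-1]
    return last


def toy_evaluate(candidate: str) -> float:
    """Stand-in for a chemistry tool: 1.0 when the chain length hits the target."""
    return 1.0 / (1.0 + abs(len(candidate) - TARGET_CARBONS))


def toy_reflect(candidate: str, score: float) -> str:
    """Stand-in for a reflection step: turn the result into textual feedback."""
    return "too short" if len(candidate) < TARGET_CARBONS else "too long"


if __name__ == "__main__":
    best, best_score, state = run_agent_loop(toy_propose, toy_evaluate, toy_reflect)
    print(best, best_score, len(state.memory))
```

Passing the three steps in as plain callables keeps the loop itself independent of any particular model or tool, so a scripted workflow and an LLM-orchestrated one can share the same skeleton.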

For industrial practitioners, these papers mark a turning point—from asking "Can LLMs do chemistry?" to confronting "How do we build and deploy our custom chemistry agents?" Together, they demonstrate that modern LLMs are powerful knowledge sources and reasoning engines capable of tackling real-world chemistry challenges, when embedded within structured workflows and integrated with broader R&D digital ecosystems.
